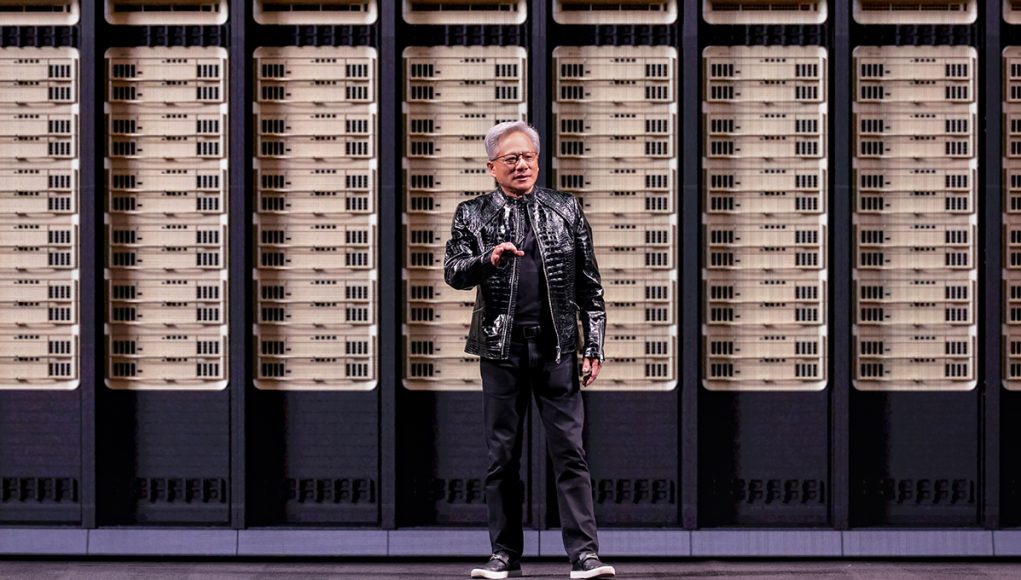

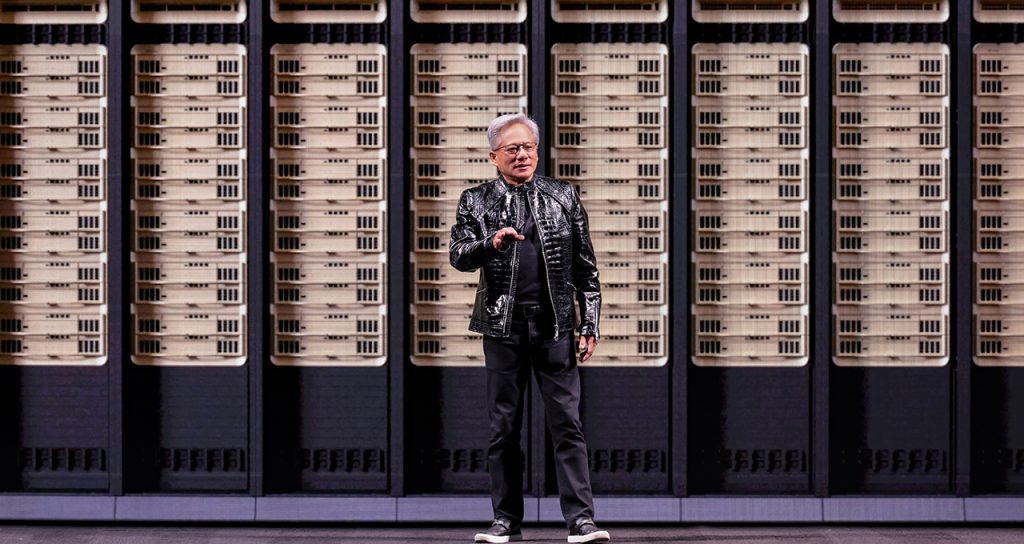

(Singapore, 06.01.2026) Nvidia started 2026 with a confident message to the technology world, signaling that the artificial intelligence boom is far from slowing down. At the CES technology show in Las Vegas, the company revealed fresh details about its next-generation Rubin chips, growing demand from China, and new AI tools that could reshape autonomous driving and robotics.

Speaking at CES, Chief Executive Officer Jensen Huang said Nvidia’s highly anticipated Rubin data center processors are now in production and on track for customer use in the second half of the year. All six chips that make up the Rubin platform have returned from manufacturing partners, clearing a key milestone in Nvidia’s product roadmap.

Huang said demand for the new chips is already very strong, driven by the rapid rise in artificial intelligence software. As AI systems become more complex and capable, they require far more computing power than existing infrastructure can provide. This growing strain on data centers, he explained, is pushing customers to invest in more advanced hardware.

Rubin represents a major performance leap over Nvidia’s current Blackwell platform. The company says the new chips are about 3.5 times faster at training AI models and five times more powerful when running them. This improvement is increasingly important as AI shifts toward more advanced “reasoning” systems, which solve problems through multiple steps rather than producing simple responses.

The Rubin platform also introduces a new central processing unit with 88 cores, roughly doubling the performance of the component it replaces. Combined with new networking and connectivity technologies, Rubin will power Nvidia’s next DGX SuperPod supercomputers. At the same time, customers will be able to buy the chips individually and deploy them in more flexible, modular setups.

Beyond raw performance, Nvidia emphasized that Rubin-based systems will be more cost-efficient. Because fewer components are needed to achieve the same results, customers can lower energy consumption and operating costs, an important consideration as data centers expand around the world.

While Nvidia continues to lead the AI accelerator market, competition is intensifying. Rivals are developing alternative chips, and major cloud providers are increasingly building their own custom processors. Some investors have also questioned whether spending on AI infrastructure can keep growing at its current pace. Despite this, Nvidia remains optimistic, repeatedly pointing to a long-term AI market that could be worth trillions of dollars.

To maintain momentum, Nvidia has chosen to reveal product details earlier than usual. Instead of waiting for its annual GTC conference in Silicon Valley, the company used CES to keep customers and partners focused on its latest technology. At the same time, Huang stressed that earlier generations of Nvidia chips are still performing well and remain in high demand.

China in Focus as H200 Approval Looms

China was another key topic at the event. Nvidia said it has seen strong interest from Chinese customers in its H200 chip, which the US government is considering letting the company ship to China under a licensing system. Chief Financial Officer Colette Kress said license applications have already been submitted and are now under review.

Kress added that Nvidia has enough supply to serve customers in China without affecting shipments to other regions, regardless of how the licensing process unfolds. However, Nvidia would still need approval from Chinese authorities. In the past, Beijing discouraged the use of an earlier, less powerful chip design known as the H20, adding uncertainty to future sales.

For now, the bulk of spending on Nvidia-powered systems comes from a small group of major customers, including Microsoft, Google Cloud, and Amazon Web Services. These cloud giants are expected to be among the first to deploy Rubin-based systems later this year.

At the same time, Nvidia is working to broaden AI adoption across the wider economy. Alongside its chip announcements, the company unveiled new AI models and tools designed to accelerate the development of autonomous vehicles and robots.

One of the highlights was a vehicle platform called Alpamayo, which allows cars to “reason” about real-world situations. Using data from cameras and sensors, an onboard computer breaks problems into steps and determines how to respond, helping vehicles deal with unexpected events such as traffic signal failures. Nvidia said the platform will be offered free of charge, and automakers will be able to retrain it for their own use.

Building on existing partnerships, including work with Mercedes-Benz, Nvidia aims to bring more advanced hands-free driving to highways and eventually complex city environments. Huang said the first Nvidia-powered cars will appear on US roads in the first quarter, followed by Europe in the second quarter and Asia later in the year. He also repeated his long-term vision of a future where autonomous vehicles are widespread.

Nvidia also introduced new AI technology for robots and said it is expanding cooperation with Siemens to bring AI deeper into factories and other industrial settings.

With Rubin chips on schedule, strong interest from global customers, and new tools pushing AI into the physical world, Nvidia used CES to send a clear message: the company intends to remain at the center of the next phase of the AI revolution.