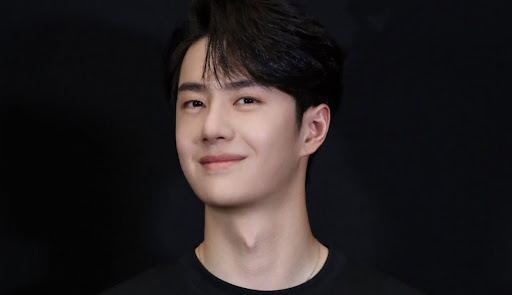

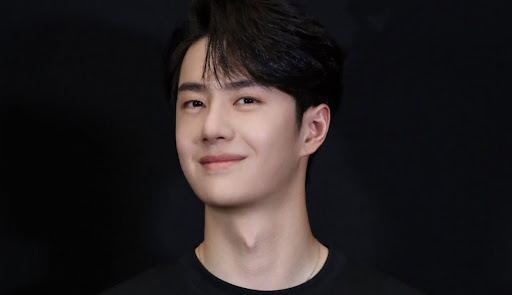

(11.07.2025, SINGAPORE) An AI hallucination case in China involving DeepSeek, the country’s best-known AI company, and celebrity Wang Yibo has left many sceptical of information provided by local AI systems, DeepSeek in particular, because they appear liable to be manipulated into producing false, misleading, or nonsensical content, Chinese media reported.

Meanwhile, DeepSeek, once hailed as a “low-cost disruptor” that shook the global AI market, is now facing an unprecedented crisis. According to data, its user engagement rate, a metric measuring how actively users interact with DeepSeek over a specific period, has plummeted from a peak of 50% earlier this year to just 3% now, and traffic to its official website has dropped by over 70% year-on-year.

Technical shortcomings, a lack of ecosystem development, and strategic misjudgments have been identified as the three major burdens dragging down this once-celebrated company. But AI hallucination is the trait that users find most intolerable, an expert noted on the MSN news website.

The Wang Yibo incident reached a high point when many mainstream Chinese media outlets published articles saying Wang’s name had been cleared of any association with a sordid corruption case. They cited an apology from DeepSeek for its AI model having baselessly connected the 27-year-old to the scandal days earlier. DeepSeek claimed it had been wrongly influenced by unsubstantiated rumors and user queries.

In its apology, DeepSeek announced that it had permanently withdrawn the inaccurate content, and it even referenced a criminal verdict issued by a Beijing court that exonerated Wang, one of China’s top-tier celebrities. Wang has a successful and diverse career spanning dance, variety shows, film and television, and has amassed a huge fan base and many brand endorsement contracts.

That, however, was only the beginning of another twist in the saga. The news of a well-known domestic AI unicorn apologizing to a celebrity naturally sparked massive media coverage. Yet when people checked DeepSeek’s official website, its WeChat account and its overseas social media profiles, no trace of such a statement could be found.

The truth is that the apology was generated by DeepSeek itself, reported 36 Kr, a Chinese media platform focused on technology and entrepreneurship. In fact, every screenshot circulating online showing the “DeepSeek apology” was content produced by DeepSeek’s AI model in response to the prompts of Wang’s fans.

The apology was therefore determined to be purely AI-generated content, not an official statement from DeepSeek’s parent company, Hangzhou DeepSeek Technology. Since AI models cannot bear legal responsibility, they are not liable for defamation under China’s current legal system.

Wang’s fans treating an AI as the spokesperson of a tech company is pure cyberpunk, remarked 36 Kr. The case began on June 30, when the verdict was delivered in the high-profile corruption case of Li Aiqing, branded “the most corrupt SOE (state-owned enterprise) official in Beijing.” Li, former Party Secretary and Chairman of Beijing Capital Group, was sentenced to death with a two-year reprieve for bribery and abuse of power.

Li was also accused of using his power to obtain sexual favors from a number of men. When his transgressions first came to light in 2022, rumors emerged claiming that a top-tier celebrity was involved. Some detractors linked Wang to the case, but his agency issued a denial that year. When the case resurfaced this year, some people revived the old rumor, prompting Wang’s fans to turn to DeepSeek.

The fabricated statement looked convincing because it included elements such as “information deleted across the internet,” “apology filed with a notary,” and “compensation plans initiated.” All of these gave it a legalistic, scrupulous look, enough for it to be taken seriously.

This case of AI hallucination highlights two major problems with today’s large AI models, lamented 36 Kr. First, their outputs are based on training data rather than real understanding, so they essentially parrot plausible-sounding text. Second, the public’s blind trust in AI allows such tools to become sources of false information.

Moreover, large AI models tend to “flatter” users: partly because of inherent flaws in “reinforcement learning from human feedback” (RLHF), they produce outputs that align with the user’s prompts. The result is that the AI simply goes along with what users want, 36 Kr added.

According to the latest report by international semiconductor research firm SemiAnalysis, DeepSeek’s global user engagement rate plummeted from 50% in January 2025 to just 3% in July, and its website traffic dropped by 63% between February and May. Statistics from Poe, an AI model integration platform, show that usage of DeepSeek’s core model R1 more than halved between February and April, falling from 7% to 3%.

In contrast, usage of ChatGPT and Google Gemini increased by 40.6% and 85.8% respectively during the same period.

Even more alarming, DeepSeek’s decline has far exceeded industry expectations. In February this year, its monthly visits reached 525 million, at one point surpassing ChatGPT’s, giving it a 6.58% share of the global market and making it the fastest-growing AI tool. By May, however, its daily active user share had plummeted from a peak of 25% to just 3%, with user attrition resembling a “free fall.”

One complaint is that hallucinations run rampant in DeepSeek. When handling specialized topics such as law and medicine, DeepSeek frequently fabricates false information. Third-party testing showed DeepSeek’s hallucination rate to be as high as 52%, far above the industry average of 30%.

“The lesson from DeepSeek shows that the key to AI success lies in commercialization strategy after technological development,” noted Zhang Bo, director of the AI Research Institute at Tsinghua University. “In the next three years, 80% of large model companies will be eliminated, and only those players with the ability to develop an ecosystem will survive.”