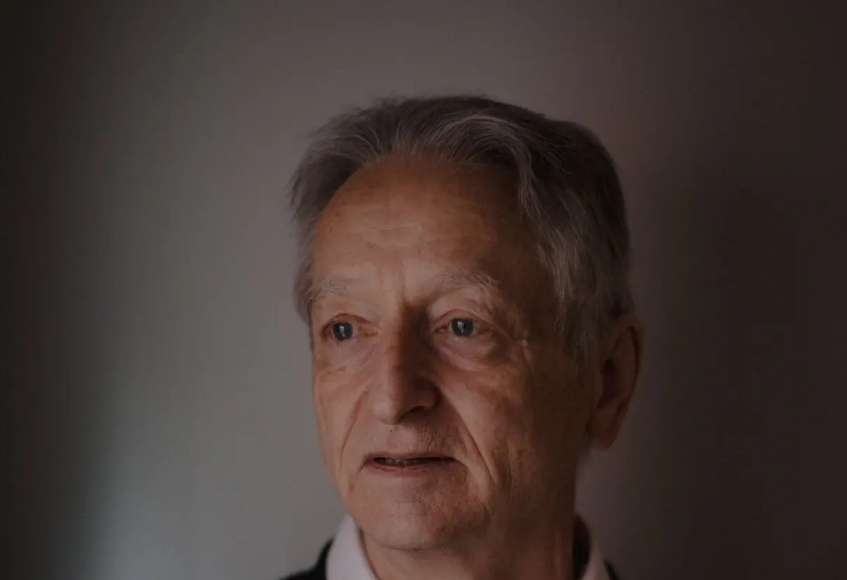

(Singapore, 2 May 2023) The danger of artificial intelligence lies in its ability not only to create misinformation that deceives ordinary people but also to produce killer robots that are even more difficult to manage than nuclear weapons, according to Geoffrey Hinton, often called “the Godfather of AI”.

The 75-year-old British expatriate and AI pioneer made the remarks after quitting his job at Google, where he worked for more than a decade and became one of the most respected voices in the field.

“It is hard to see how you can prevent the bad actors from using it for bad things,” Hinton said, as quoted in a New York Times report.

According to the report, he has officially joined a growing chorus of critics who say tech companies are racing toward danger with their aggressive campaign to create products based on generative artificial intelligence, the technology that powers popular chatbots like ChatGPT.

In fact, after the San Francisco start-up OpenAI released a new version of ChatGPT in March, more than 1,000 technology leaders and researchers signed an open letter calling for a six-month moratorium on the development of new systems because AI technologies pose “profound risks to society and humanity.”

A few days later, 19 current and former leaders of the Association for the Advancement of Artificial Intelligence released their own letter warning of the risks of AI.

Hinton did not sign either of those letters. He notified Google last month that he was resigning, reportedly so that he could speak freely about the risks of AI.

His immediate concern is that the internet will be flooded with false photos, videos and text, and the average person will “not be able to know what is true anymore”, the report says.

He is also worried that AI technologies will in time upend the job market as they could replace paralegals, personal assistants, translators and others who handle rote tasks. “It takes away the drudge work,” he said. “It might take away more than that.”

An even greater concern, in his view, is that individuals and companies allow AI systems not only to generate their own computer code but also to run that code on their own. He fears a day when truly autonomous weapons, those killer robots, become reality.

Unlike with nuclear weapons, there is no way of knowing whether companies or countries are working on the technology in secret. The best hope is for the world’s leading scientists to collaborate on ways of controlling the technology. “I don’t think they should scale this up more until they have understood whether they can control it,” he said, as quoted in the NYT story.

In 2012, Hinton and two of his graduate students at the University of Toronto created technology that became the intellectual foundation for the AI systems that the tech industry’s biggest companies believe are key to their future.

His journey from AI groundbreaker to doomsayer marks a remarkable moment for the technology industry at probably its most important inflection point in decades.

Currently, a new crop of chatbots powered by artificial intelligence has ignited a scramble to determine whether the technology could upend the economics of the internet.

Besides OpenAI’s ChatGPT, Microsoft, OpenAI’s primary investor and partner, has added a similar chatbot to Bing; Google has Bard; and Baidu, China’s major search giant, has unveiled Ernie, the major rival to ChatGPT from the world’s No. 2 economy.

As companies improve their AI systems, Hinton believes, the systems become increasingly dangerous. “Look at how it was five years ago and how it is now,” he said, as quoted by the NYT. “Take the difference and propagate it forwards. That’s scary.”

However, Google’s chief scientist, Jeff Dean, said in a statement: “We remain committed to a responsible approach to AI. We’re continually learning to understand emerging risks while also innovating boldly.”
